Music Education Interface (MEI) is an educational web application that supports users in mastering musical competencies. Instrumental pedagogy expects learners to build fundamental technical skills (e.g., rhythm, fingering, intonation, dynamics, articulation, timbre) so their musical ideas are not limited by technique. Metronomes and tuners help with rhythm and pitch, but other skills still lack focused technological support. MEI addresses this gap with dedicated modules for different competencies. The app facilitates learning through guided repetition, structured reflection and multi-sensory engagement, addressing critical technical and auditory skills.

Fingering fundamentals

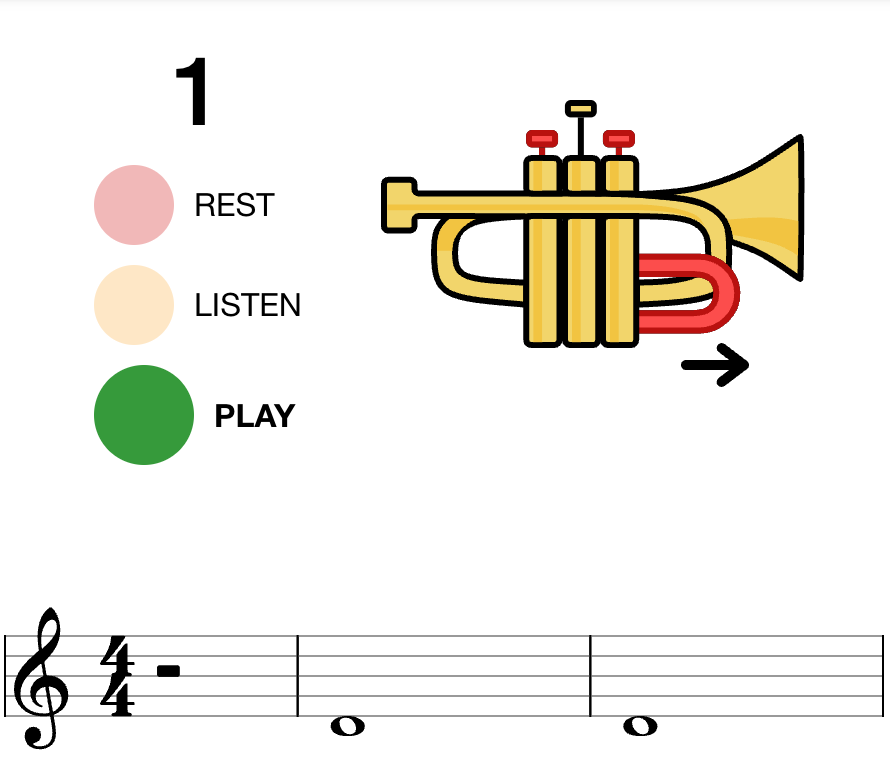

The fingering module helps learners connect what they see, hear and play: a note on the staff, its name, pitch and corresponding fingering are presented in sequence. Each sequence in the exercise shows the fingering next to the notation, pairs it with aural cues and cycles through rest → reference → play so students can internalize those associations at a steady tempo.

By allowing students to set custom parameters, MEI adapts to different skill levels, creating a personalized learning experience. Starting with a beginner-friendly interface, users can customize their practice by selecting:

- ⏰ Metronome Speed: Adjust the tempo for practice.

- 🎵 Lowest Note & Highest Note: Define a custom range of notes to focus on.

Students set these parameters with the interactive controls before starting an exercise.

Each exercise is structured in three steps:

- The first measure is a rest, allowing the student to prepare

- The second measure plays a reference note, offering an example to imitate

- The third measure is for the user to play the note
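The timing of this three-measure cycle follows directly from the metronome speed. The sketch below is illustrative only and is not taken from the MEI codebase: the names `Measure`, `build_cycle` and `build_exercise`, the 4/4 default, the use of MIDI note numbers for the range, and the ascending note order are all assumptions.

```python
from dataclasses import dataclass


@dataclass
class Measure:
    phase: str         # "rest", "reference" or "play"
    start_s: float     # start time in seconds from the beginning of the exercise
    duration_s: float  # length of the measure in seconds


def build_cycle(bpm: float, beats_per_measure: int = 4, start_s: float = 0.0) -> list[Measure]:
    """Return the rest -> reference -> play measures for one note."""
    if bpm <= 0:
        raise ValueError("bpm must be positive")
    measure_s = beats_per_measure * 60.0 / bpm  # one beat lasts 60 / bpm seconds
    return [
        Measure(phase, start_s + i * measure_s, measure_s)
        for i, phase in enumerate(("rest", "reference", "play"))
    ]


def build_exercise(bpm: float, lowest_midi: int, highest_midi: int,
                   beats_per_measure: int = 4) -> list[tuple[int, list[Measure]]]:
    """One three-measure cycle per note in the chosen range (ascending order is an assumption)."""
    if lowest_midi > highest_midi:
        raise ValueError("lowest note must not be above highest note")
    cycle_s = 3 * beats_per_measure * 60.0 / bpm
    return [
        (note, build_cycle(bpm, beats_per_measure, start_s=i * cycle_s))
        for i, note in enumerate(range(lowest_midi, highest_midi + 1))
    ]
```

For example, `build_exercise(120, 60, 62)` yields three cycles of 6 seconds each, since a 4/4 measure at 120 BPM lasts 2 seconds.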

At the top of the screen, an instrument-specific fingering chart is displayed so students can match notes on the staff to the correct fingerings. The current implementation supports clarinet, oboe and trumpet.

MEI is also available as a free beta version for iOS and Android devices:

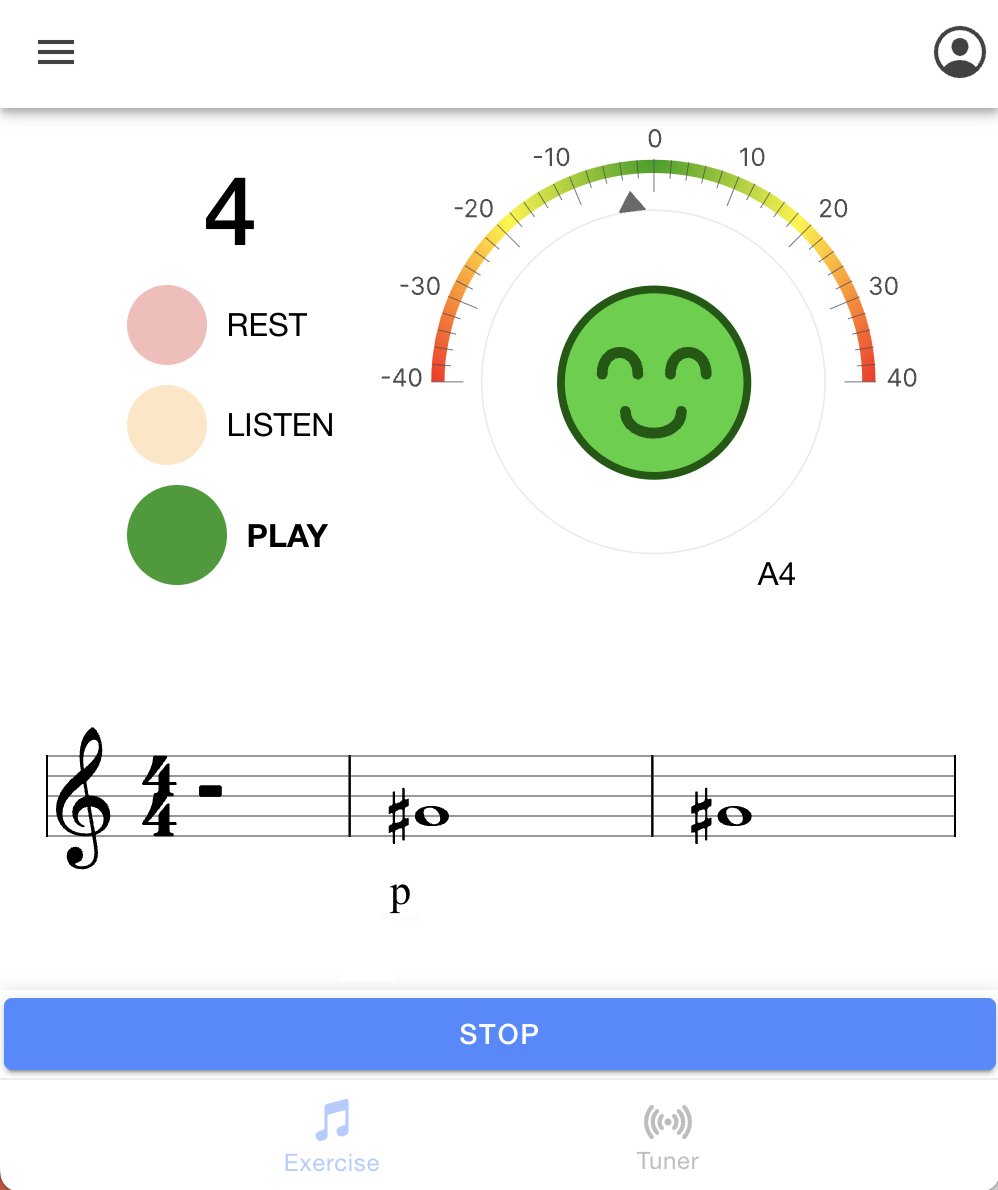

Intonation and Dynamics

Once learners can reliably play the right notes, they can focus deliberate practice on other technical skills. With a chromatic tuner and dynamic markings, MEI encourages users to fine-tune their pitch and volume control, bringing more expression and style to their playing.

The repository includes a chromatic tuner module for real-time intonation feedback, so players can hear and see how close they are to pitch. Dynamic markings are also baked into the score and mirrored in the reference sound, inviting learners to practice the same passage at different volume levels.
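As a rough illustration of what a chromatic tuner reports, the sketch below maps a detected frequency to the nearest equal-tempered note and its deviation in cents. It is not the MEI tuner module: the function name `nearest_note`, the A4 = 440 Hz reference and the sharp-only note spelling are assumptions, and pitch detection itself (getting `freq_hz` from audio) is out of scope here.

```python
import math

NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


def nearest_note(freq_hz: float, a4_hz: float = 440.0) -> tuple[str, float]:
    """Return (note name, deviation in cents) for a detected frequency."""
    if freq_hz <= 0:
        raise ValueError("frequency must be positive")
    # Fractional MIDI note number: 69 is A4, and each semitone is a factor of 2**(1/12).
    midi_float = 69 + 12 * math.log2(freq_hz / a4_hz)
    midi = round(midi_float)
    cents = 100 * (midi_float - midi)  # positive = sharp, negative = flat
    name = NOTE_NAMES[midi % 12] + str(midi // 12 - 1)
    return name, cents
```

A player sounding 445 Hz would be shown A4, roughly 20 cents sharp.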

Articulation

MEI also addresses the attack clarity of a produced tone. A digital signal processing algorithm measures the duration of the attack transient in milliseconds, giving users real-time feedback on how clear their articulation is.

"Attack clarity is finding the purity and the best beginning of sound"

Achieving a clean and controlled attack is a fundamental skill for wind and bowed string musicians, influencing both technical precision and artistic expression. A dedicated articulation analysis tool lets learners experiment with different attack styles (e.g., staccato, détaché, martelé) while receiving real-time feedback on the duration of their sound's attack phase. This immediate insight allows students to adjust their technique as they play and to understand how articulation affects overall musical performance. By analyzing how the transient of their sound develops, they gain finer control over articulation and can make more informed musical choices.
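To make the idea of an attack duration in milliseconds concrete, here is a minimal sketch using a common rise-time heuristic: the time the amplitude envelope takes to climb from 10% to 90% of its peak. This is a stand-in for illustration, not the algorithm described in the paper. The function names, the thresholds and the 64-sample window are assumptions, and `ramped_sine` only generates a synthetic test tone.

```python
import math


def attack_time_ms(samples, sample_rate, low=0.1, high=0.9, window=64):
    """Estimate attack duration as the 10%-90% rise time of a coarse envelope."""
    if not samples:
        raise ValueError("no samples given")
    # Coarse envelope: peak absolute amplitude in consecutive, non-overlapping windows.
    envelope = [
        max(abs(s) for s in samples[i:i + window])
        for i in range(0, len(samples), window)
    ]
    peak = max(envelope)
    if peak == 0:
        raise ValueError("signal is silent")
    # First window at or above each threshold.
    i_low = next(i for i, e in enumerate(envelope) if e >= low * peak)
    i_high = next(i for i, e in enumerate(envelope) if e >= high * peak)
    return (i_high - i_low) * window * 1000.0 / sample_rate


def ramped_sine(attack_s, sample_rate=44100, freq_hz=440.0, total_s=0.2):
    """Synthetic tone whose amplitude rises linearly over attack_s seconds."""
    n_attack = max(1, int(attack_s * sample_rate))
    return [
        min(1.0, n / n_attack) * math.sin(2 * math.pi * freq_hz * n / sample_rate)
        for n in range(int(total_s * sample_rate))
    ]


slow = attack_time_ms(ramped_sine(0.050), 44100)  # soft, gradual onset
fast = attack_time_ms(ramped_sine(0.005), 44100)  # crisp onset
```

With these synthetic tones the gradual onset yields a clearly longer estimate than the crisp one. The time resolution is limited by the window length (about 1.5 ms at 44.1 kHz), and real recordings would need noise handling that this sketch omits.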

Audio Examples!

Compare an unclean and a clean articulation:

Unclean Attack

Clean Attack

Read the full paper for details on the developed algorithm.

Timbre

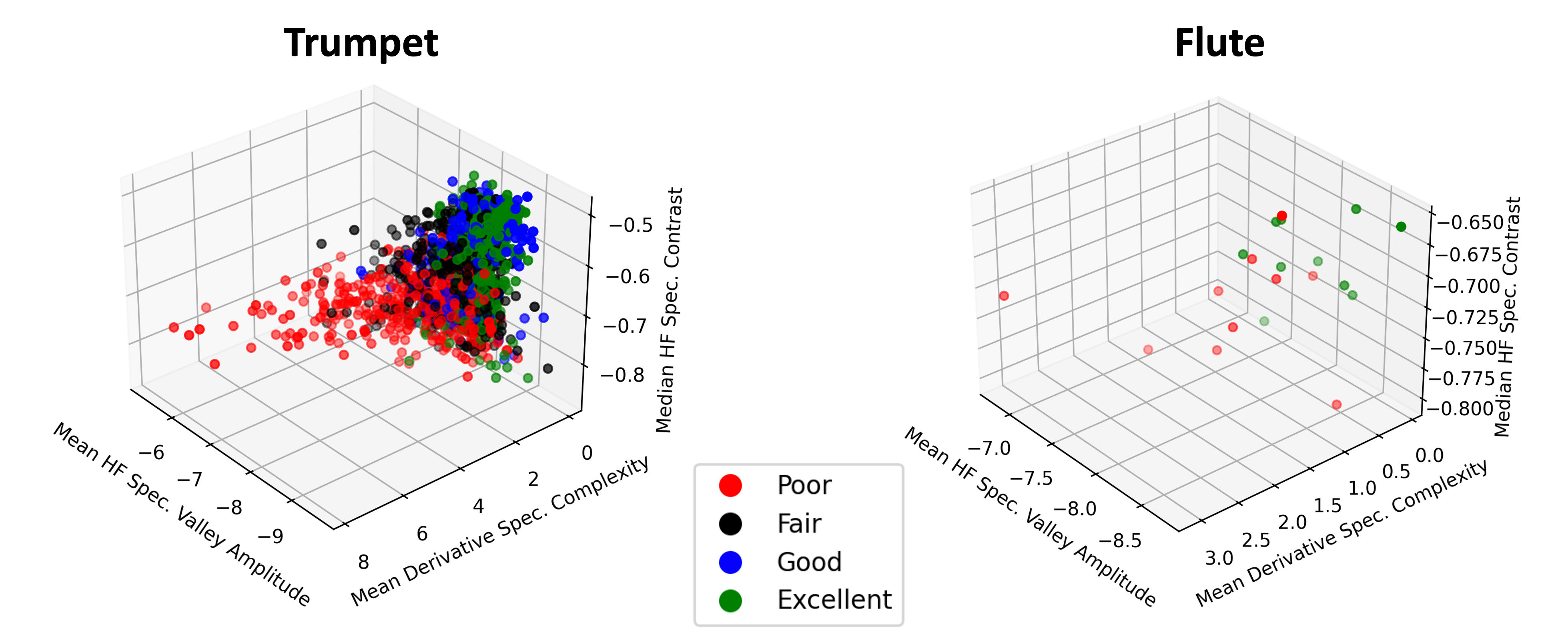

Brass pedagogy often notes that tensions in a player’s body affect their sound. In other words, timbre can reveal how efficiently a tone is produced. Building on that idea, a machine learning model for trumpet was trained on an extensive audio dataset graded by expert teachers, reaching accuracy comparable to human evaluators. The model classifies timbral quality inside MEI, adding a new layer of feedback for developing a resonant, efficient sound.

Watch the DEMO to see the timbre model inside the pedagogical app:

For trumpet, a 3D visualization of extracted spectral features maps sound efficiency in real time, from red (poor) to green (excellent). As students play, their sound traces a path through this space, making it easy to experiment with timbral possibilities while aiming for a resonant, efficient tone. A similar pattern was observed in a preliminary test for flute on a smaller dataset, suggesting potential cross-instrument insights that warrant further study.
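One simple way to realize a red-to-green scale is to interpolate linearly between the two colors. The sketch below assumes the model's output has already been normalized to an efficiency score between 0 (poor) and 1 (excellent); that normalization and the function name `efficiency_to_rgb` are hypothetical and may differ from how MEI colors its visualization.

```python
def efficiency_to_rgb(score: float) -> tuple[int, int, int]:
    """Map a score in [0, 1] to an RGB color from red (0) to green (1)."""
    s = min(1.0, max(0.0, score))  # clamp out-of-range scores
    return (round(255 * (1 - s)), round(255 * s), 0)
```

Scores outside the expected range are clamped, so a noisy prediction never produces an invalid color.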

See the two articles for more details: SMAC article and ISMIR article.

Roadmap

Future work focuses on expanding the current components into learner-friendly mobile apps and deepening the pedagogical tooling. The next steps include:

- 📱 Mobile-first experiences that integrate all feedback modules in a learner-friendly app.

- 🎼 Fingering indications for additional instruments to broaden the repertoire beyond the instruments currently supported.

- 🧩 New practice components to further enrich the learning experience.

- 🏫 Testing with music schools to validate usability, accessibility and learning impact.

The objective is to make music education more engaging, effective and adaptable while keeping the tools practical for real teaching contexts.

Open source orientation

MEI is released under the Affero license. Code, documentation, design processes and open access publications are shared to support transparency, reuse and long-term sustainability. The aim is to contribute not only a tool, but also a reproducible approach to inclusive, adaptive music learning.